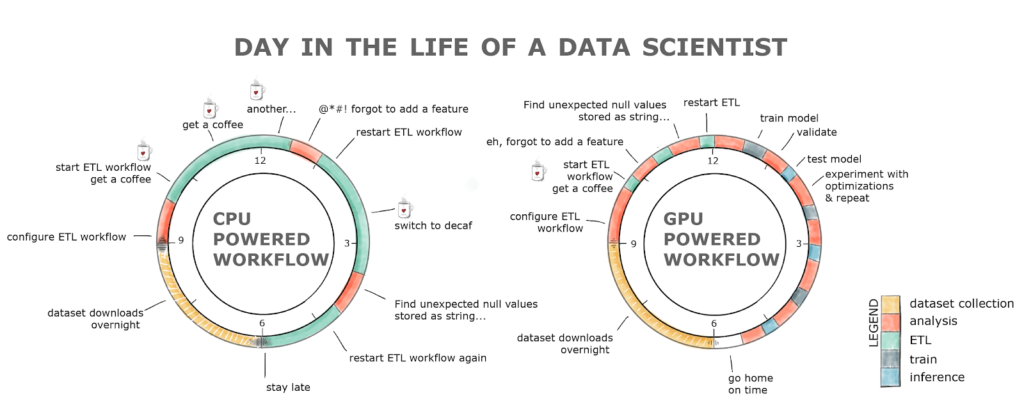

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

H2O.ai Releases H2O4GPU, the Fastest Collection of GPU Algorithms on the Market, to Expedite Machine Learning in Python | H2O.ai

RAPIDS: Open GPU Data Science | Scipy 2019 Tutorial | Scopatz, Becker, Kraus, Gama Dessavre - YouTube

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

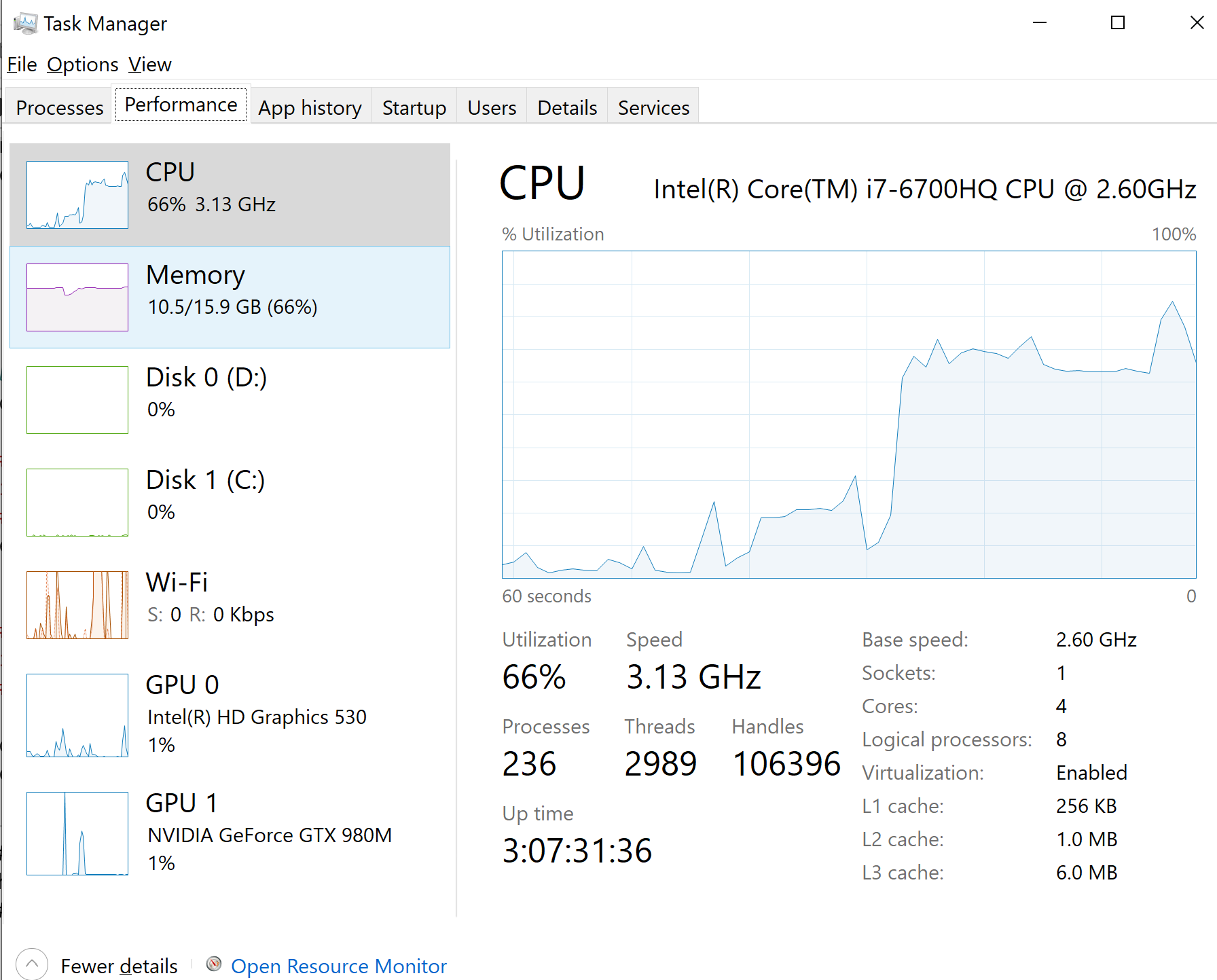

Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange

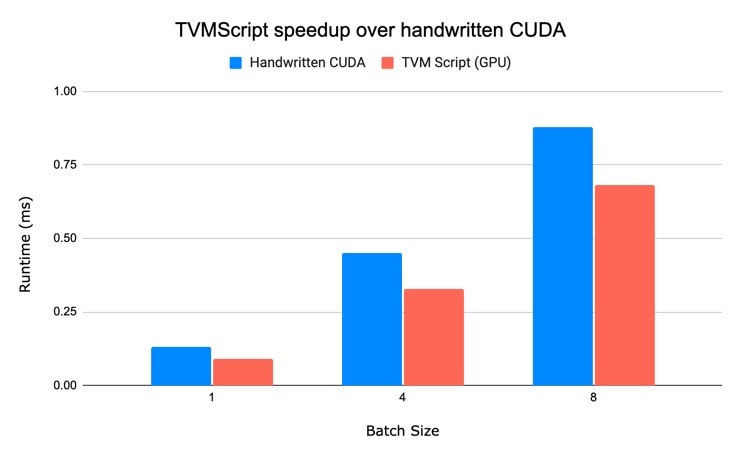

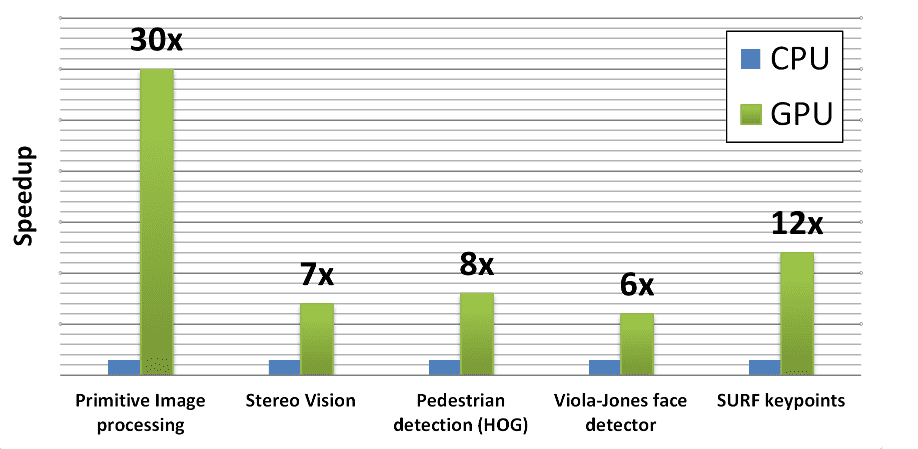

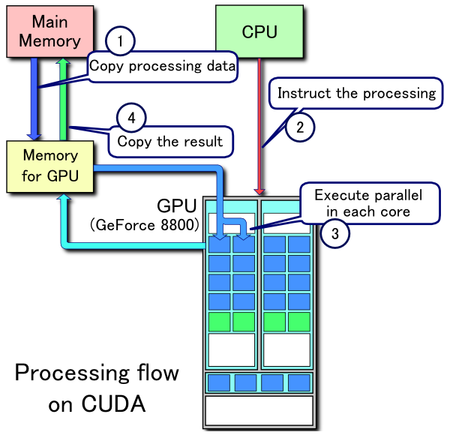

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

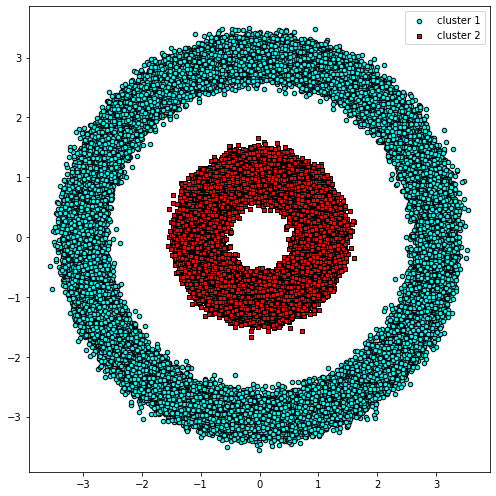

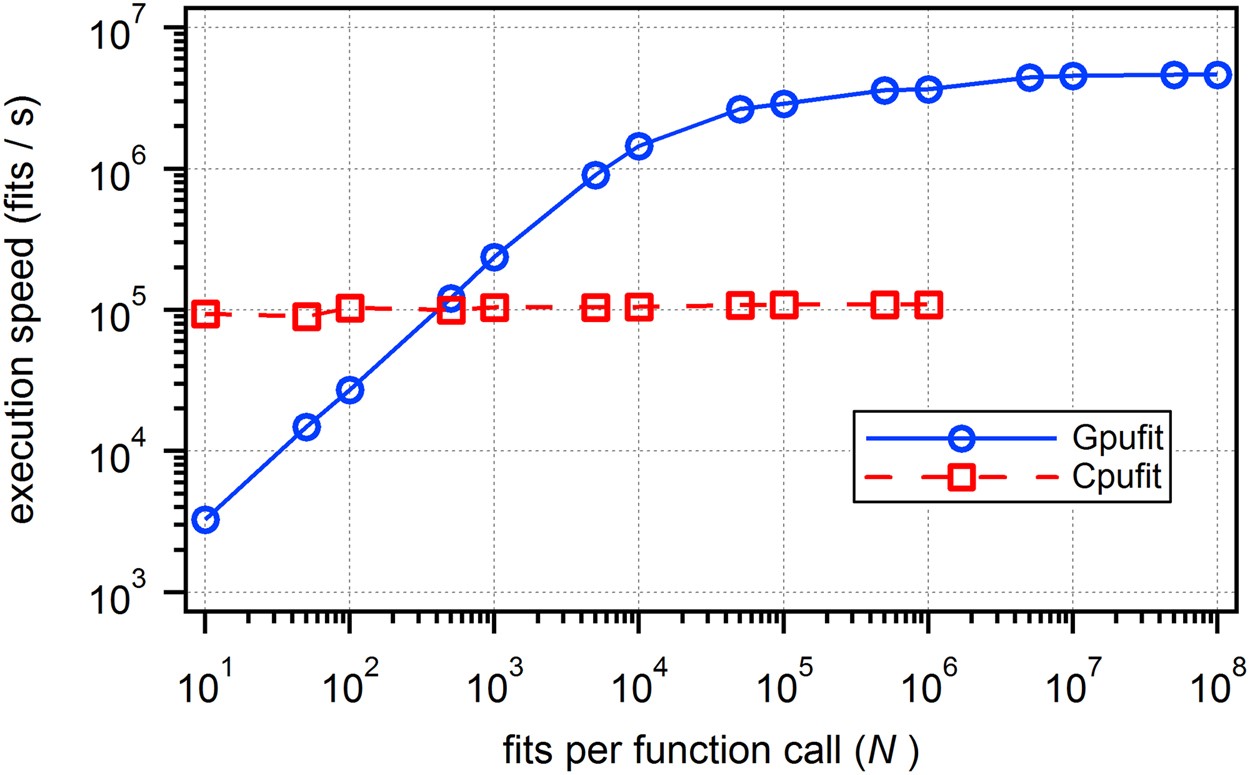

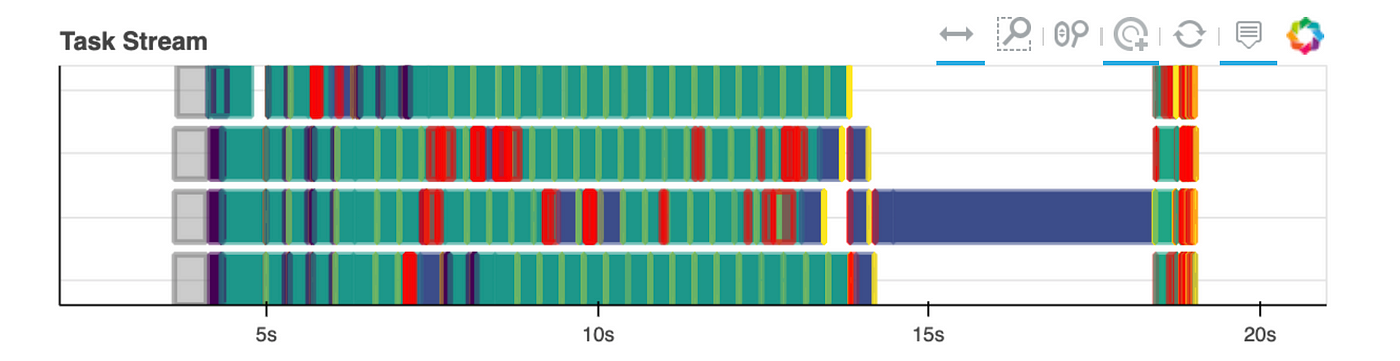

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence